Mere hours after I posted my welcome post last Sunday, OpenAI revealed its newest public AI tool - Deep Research. It is “an agent that uses reasoning to synthesize large amounts of online information and complete multi-step research tasks for you.” Incidentally, my current job title is “Public Policy Researcher” and the title of the job I’m starting in March is “Analyst”. If someone asked me what I do for a living I could respond with OpenAI’s description of Deep Research and cover all my bases. Naturally, Sunday was a bit scary for me.

Some people describe their experience with Deep Research as the first time they “felt the AGI.” AGI here means Artificial General Intelligence, something nearing the capabilities of a human mind. Whether or not these feelings map onto any truth, it does seem like Deep Research is capable of incredible things.

Here are some examples from across the Twitter-sphere.

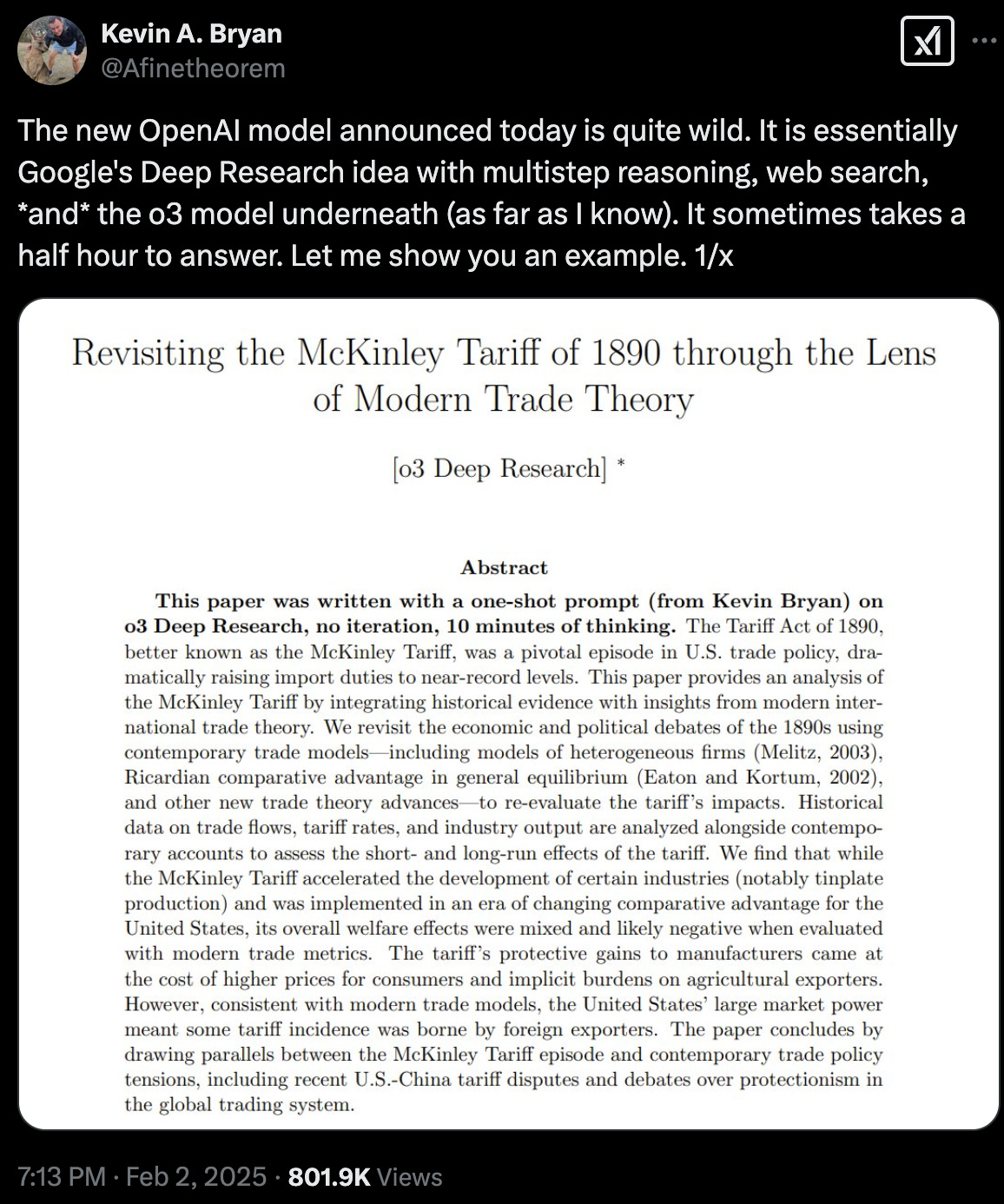

Professor of Strategic Management at the University of Toronto Kevin Bryan uses Deep Research to produce a draft of an academic paper about tariffs and the economics of trade.

In his thread, he notes that he has been asked to review journal submissions that were worse than this draft, which took Deep Research 30 minutes to produce.

Since Deep Research is internet-search-enabled, it is far less prone to “hallucinations” than previous iterations of ChatGPT.

Later in the thread, Bryan predicts that academics could “publish papers they ‘wrote’ in a day” in “B-level journals.”

Professor of Mathematics at the University of Pennsylvania Robert Ghrist believes Deep Research can now automate literature reviews.

An anecdote from Ethan Mollick, a professor at Wharton.

These reactions and use cases are startlingly impressive. For the first time, I’m beginning to see how tools like Deep Research will disrupt entry-level white-collar work. To date, the main effect of generative AI tools on office work has been elevating the performance of people willing and able to integrate them into their workflow. In the not-too-distant future, the main effect may be to displace workers.

Still, I don’t think this is an inevitability, for two complementary reasons. First, Deep Research and the AI computing necessary to respond to queries are, for now, scarce resources, while human capital is relatively abundant. Right now Deep Research is only available to OpenAI customers who pay $200 a month, and that subscription only permits 100 Deep Research queries each month. At first, this sounds like a lot, but the limit forecloses the quality that makes AI so valuable in day-to-day knowledge work: iteration. When I use Copilot for programming tasks, I only get the answers I’m looking for after iterating through my problem over and over again and pushing Copilot on its errors. It works fast and gives short responses. Compared to iterating through programming problems with a human, Copilot is much more effective. With a 100-query limit and who-knows-how-much compute cost OpenAI is eating on the backend to deliver this product, I don’t think iterating with Deep Research on long research products has the same comparative advantage over iteration by human teams. So much depends on the initial prompt, which sends Deep Research off into the ether on a single path, like an information-retrieval rocket. My intuition is that initial prompts are rarely perfect, and that the ability to adapt on the fly to new information needs matters more than how fast the rocket ship can go. For now, I think humans maintain the comparative advantage here.

Second, people just instinctually do not trust AI. I think this is a hangover effect from most people’s first interactions with ChatGPT - it was interesting, but not super useful, and not replicating human-level intelligence. I would bet the term the average person associates most with AI is “hallucination.” My prediction for the next several years is that this first impression will be very sticky because the average person will treat “AI” generally as if it is a single person or entity. Imagine if you received a piece of work from a human employee that was littered with falsehoods, sloppy work, made-up citations, and boring prose. I bet it would take you a very long time to trust that person’s work product again, even if it magically became superior overnight. This is how most of the world treats AI.

The problem is that this is a poor analogy, because AI isn’t like a single employee who might or might not get better at their job over time. AI models are distinct. The AI agents that run on the models are more like separate individuals of wildly varying abilities. And their abilities are demonstrably increasing rapidly over time. Still, whether AI agents can surpass humans at knowledge work so obviously (and cheaply) that they overcome the bad first impression made by their inferior ancestors is an open question.